7-Week AI Engineering Accelerator 2026

Master AI foundations with 8 comprehensive courses over 7 weeks, designed to build practical skills step by step.

Join our Live Training | February 24th through April 9th

Only $24.99 per month, billed annually

The primer series is taught LIVE

If you miss a live session, it is available on demand the next day

33 coding exercises let you practice hands-on skills and reinforce what you learn.

Build your AI foundation with beginner-friendly on-demand courses in Python, SQL, data literacy, and GenAI.

What You’ll Learn

AI & Machine Learning Foundations

February 24th | 2:00 - 4:30pm ET

This AI course introduces participants to the basics of artificial intelligence (AI) and machine learning. We will first explore the various types of AI and then move on to fundamental concepts such as algorithms, features, and models. We will study the machine learning workflow and how it is used to design, build, and deploy models that learn from data to make predictions. The session covers model training, including supervised and unsupervised learning, as well as generative AI.

Building AI with Coding Assistants

February 26th | 2:00 - 4:30pm ET

This session is your on-ramp to AI-native coding, where natural language replaces boilerplate and speed matters more than syntax. You’ll explore how tools like Lovable, Claude Code, and Colab can help you write and reason through Python code, generate functions, and structure real-world AI projects.

Data Prep for Machine Learning and LLMs with Python

March 3rd | 2:00 - 4:30pm ET

Data prep isn’t just the first step in AI engineering; it’s the one that makes everything else work. This session focuses on building fast, reliable, and scalable data pipelines in Python, with patterns that support both machine learning models and LLM-based applications.

AI Engineering Foundations

March 12th | 2:00 - 4:30pm ET

We’ll cover topics like modular code for ML pipelines, data versioning, model evaluation strategies beyond accuracy, and tools for tracking experiments and model performance. You’ll explore what makes an AI system reliable, observable, and maintainable — and how to structure code and workflows that can grow with your use case.

Introduction to LLMs, Fine‑Tuning & Evaluation

March 19th | 2:00 - 4:30pm ET

This session gives you a working understanding of the tools and patterns behind real-world LLM systems. You’ll explore how large language models process input, how to design effective prompts, and when fine-tuning or parameter-efficient training makes sense for your use case.

You’ll learn how to structure data for LLM workflows, select and evaluate model outputs beyond just accuracy or BLEU scores, and apply key principles for prompt safety, latency, and cost-performance tradeoffs. Hands-on examples will demonstrate how to build and test LLM components using modern frameworks.

Building Knowledge‑Grounded LLMs with RAG

March 26th | 2:00 - 4:30pm ET

Retrieval-Augmented Generation (RAG) is a key architecture for building reliable, context-aware AI systems — and this session shows you how to implement it end to end. You’ll start by understanding when and why RAG is needed to reduce hallucinations and improve factual accuracy in LLM-powered applications.

You’ll then build your own RAG stack: preparing source documents, creating embeddings, storing them in vector databases, and integrating with LLMs using frameworks like LangChain. We’ll cover retrieval strategies, reranking, latency considerations, and prompt chaining — plus hands-on guidance for evaluating RAG output quality.

Automating Workflows with Agentic AI

April 2nd | 2:00 - 4:30pm ET

In this session, you’ll learn how to build real AI agents that take action, make decisions, and collaborate on tasks. We’ll explore the architecture of autonomous agents — including memory, tool use, planning, and routing — and show you how to create intelligent workflows using frameworks like LangGraph, LangFlow, CrewAI, and AG2 (AutoGen 2).

Through hands-on demos, you’ll build multi-agent chains, orchestrate tool use, and design agents that work together to accomplish structured objectives — from research to automation. You’ll also learn how to evaluate agent behavior for stability, reliability, and edge-case handling.

Capstone: Build and Deploy Your Agentic AI Application

April 9th | 2:00 - 4:30pm ET

In this final session, you’ll bring everything together to build and deploy a production-style AI agent — combining RAG pipelines, prompt-engineered LLM calls, and agentic workflows. Using the tools introduced throughout the Accelerator (e.g., CrewAI, LangFlow, and AG2), you’ll design an application that responds to user input with accurate, contextual, and task-aware behavior.

The capstone uses a build-as-you-go approach: each prior workshop contributes to the final system — from data prep and model evaluation to chaining tools and routing agent logic. You’ll walk away with a fully functioning AI application you can showcase or extend: a smart, modular agent built with real-world patterns.

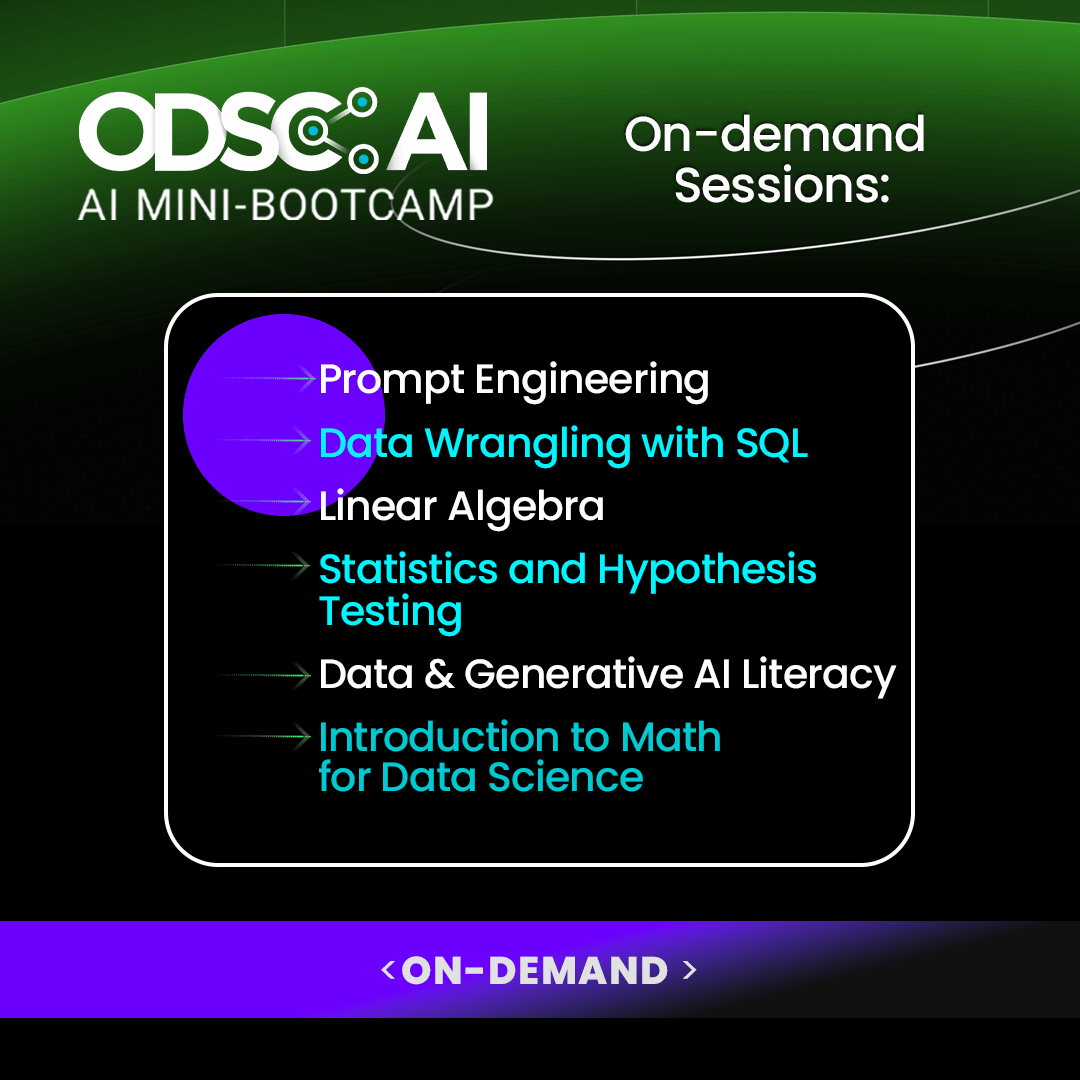

Optional On-Demand Prerequisites:

Founder of ODSC, and Venture Partner/Head of AI at Cortical Ventures
